What AI Can Do for Progress Monitoring

It’s time to get our geek on! The Reconstruct founders have published more than 100 scientific articles in computer vision, artificial intelligence, and applications to construction management. Our research is the backbone of Reconstruct’s patented technologies and has been recognized with more than 10,000 citations and many awards. Our ultimate goal is to fully automate construction progress monitoring so that builders can focus on getting the job done. Here, we talk about a few ideas to make that happen.

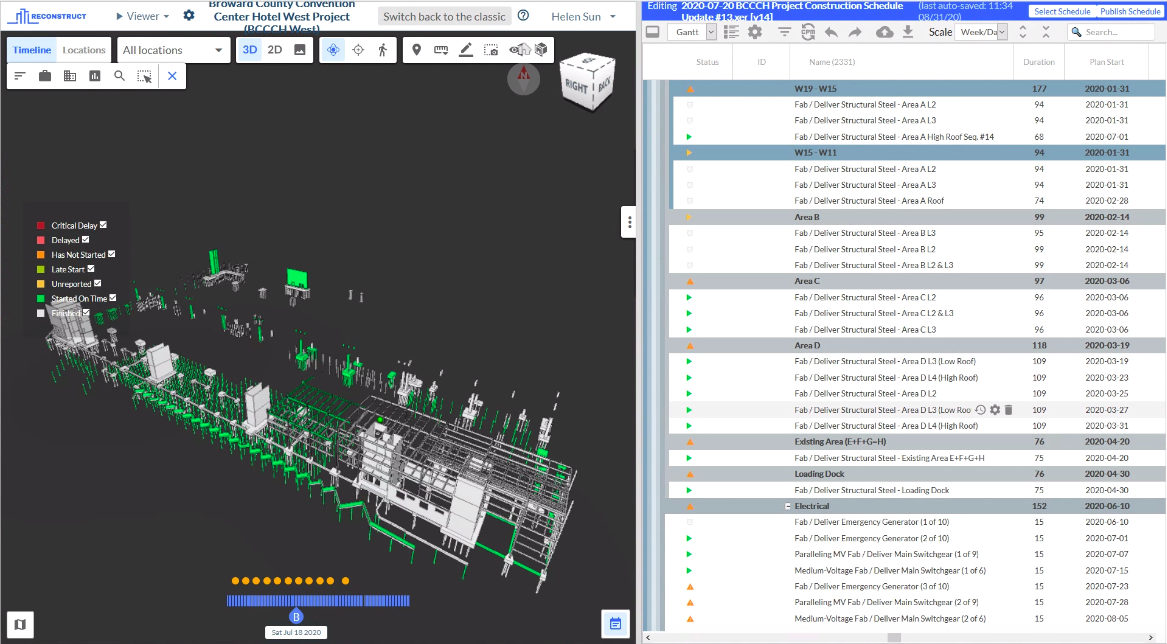

Plans always change, so progress monitoring is crucial for construction teams to take corrective action. But monitoring congested and quickly changing construction sites is a massively complex problem. Automated progress monitoring requires getting the right visual data, aligning to BIM and plans, and verifying which elements have been put in place.

Visual Data Capture

Reconstruct incorporates images from drones, smartphones, 360 cameras, and time-lapse cameras, as well as laser scans, into our 4D reality models. These are all good capture solutions, but capturing photos automatically would improve both frequency and reliability.

Towards this end, we've built a prototype ground robot for indoor 3D mapping (see video above). The robot can learn to cover a designated path while avoiding people and other obstacles and taking a 360 video. It works well, but can be foiled by a two-by-four left blocking the hallway, so for now our indoor solution uses progress photos and videos captured by humans with Reconstruct Field.

For areas that are hard to access, we've also designed a way for a drone to autonomously place a camera onto a steel surface, such as a wide flange beam, as shown in the video above. The drone picks up and drops off the camera using a cam mechanism, similar to the clicker of a ballpoint pen. The camera sticks to the steel surface using an attached magnet. This could be a good way to put wireless, low-power time-lapse cameras all over the construction site for continuous capture.

Aligning Reality to Plans

The next step in progress monitoring is to align photos and point clouds to building information models (BIM) that specify the geometry and material of each element to be constructed.

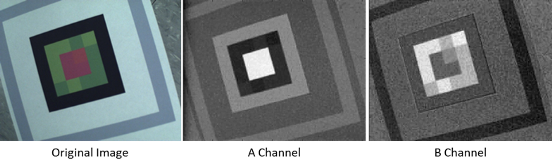

One way to align precisely is to place markers, or tags, around the site. We've designed 'ChromaTag' (above), which can be detected more accurately and 10x to 50x faster than existing markers. The key is to encode the pattern for detection and identification within the a* and b* channels of the CIE L*a*b* color space. Because these colors are rarely seen next to each other in natural scenes, the tag can be detected very quickly, whereas detectors for colorless tags have to spend a lot of time sorting through the more common high-contrast regular regions. We also designed the tag detection system to look only for differences, making it robust to variations in lighting and printing. As a result, the tag can be detected at 925 frames per second (30x real time!) with virtually no misidentified tags. Using these markers, we can align photos captured indoors to building plans, even in repetitive structures such as hotels, with detection algorithms built into smartphones and cameras to ensure completeness during capture.
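To make the color-difference idea concrete, here is a minimal Python sketch. It is not the ChromaTag detector itself; it only illustrates why comparing two neighboring regions in the a* channel tends to survive a change in brightness. The function names `srgb_to_lab` and `a_star_bit` and the sample colors are assumptions made for illustration, and the conversion uses the standard sRGB to CIE L*a*b* formulas with a D65 white point.

```python
def srgb_to_lab(rgb):
    """Convert an 8-bit sRGB triple to CIE L*a*b* (D65 white point)."""
    def linearize(c):
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(c) for c in rgb)
    # Linear sRGB -> CIE XYZ
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b

    def f(t):
        d = 6 / 29
        return t ** (1 / 3) if t > d ** 3 else t / (3 * d * d) + 4 / 29

    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def a_star_bit(region_1, region_2):
    """Hypothetical helper: 1 if region_1 is redder (higher a*) than region_2."""
    return 1 if srgb_to_lab(region_1)[1] > srgb_to_lab(region_2)[1] else 0


# Illustrative colors only: a red patch next to a green patch,
# first well lit, then at half the pixel intensity.
bright = a_star_bit((200, 40, 40), (40, 160, 40))
dim = a_star_bit((100, 20, 20), (20, 80, 20))
```

Both `bright` and `dim` come out the same because only the sign of the a* difference is read, not the absolute color values. A real detector has many more concerns (finding the tag, handling perspective, decoding an ID), which this sketch leaves out.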

Identifying Construction Elements

Finally, with reality captured and aligned to plans, we need to determine which elements have actually been put in place.

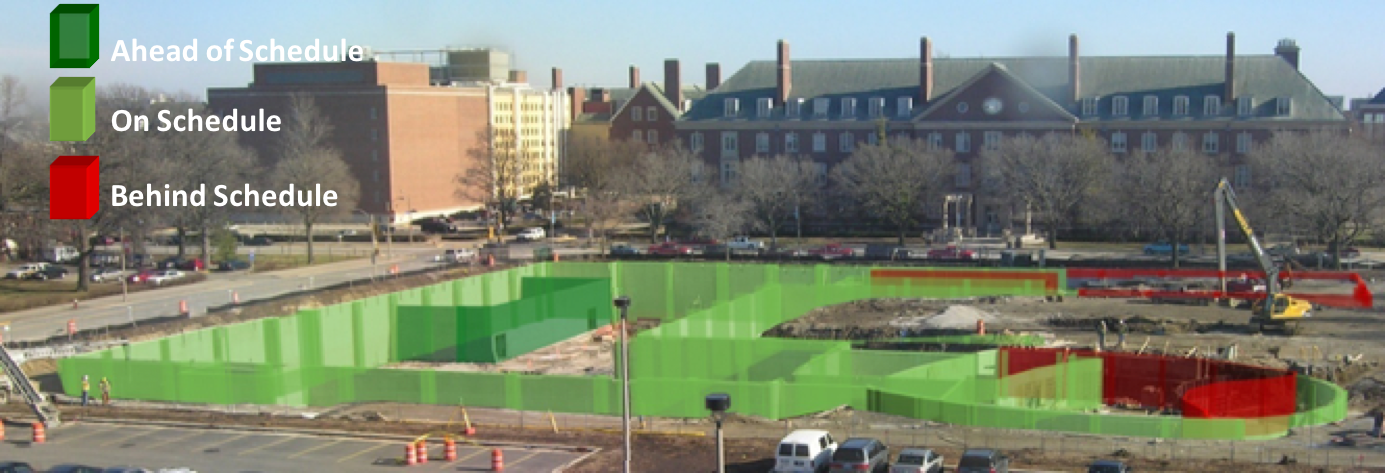

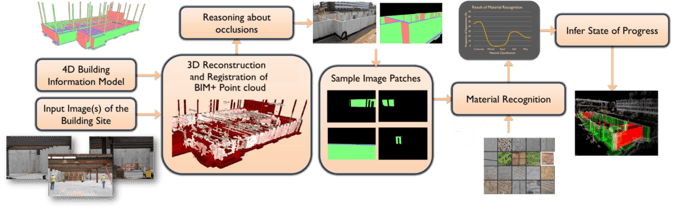

Above, we diagram an overview of our approach to measure progress. When elements are fully visible, it is easy to compare the geometry from our reality model to the BIM specifications. The challenges are that sometimes elements such as multilayer walls are constructed over time with varying materials and that many elements are not fully visible in the reality capture.
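For the fully visible case, the geometry comparison can be sketched as a simple coverage score: sample points on the BIM element's surface and count how many have a captured point nearby. This is a simplified illustration rather than our production method; the function name `coverage`, the 5 cm tolerance, and the sample coordinates are all assumptions.

```python
import math


def coverage(element_points, reality_points, tol=0.05):
    """Fraction of BIM surface samples with a captured point within tol (meters)."""
    if not element_points:
        return 0.0
    hits = sum(
        1
        for p in element_points
        if any(math.dist(p, q) <= tol for q in reality_points)
    )
    return hits / len(element_points)


# Illustrative data: four samples along a beam, two of them observed.
beam_samples = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (3, 0, 0)]
captured = [(0, 0, 0.01), (1, 0.02, 0), (5, 5, 5)]
score = coverage(beam_samples, captured)
```

A low score can mean either that the element is not built yet or that it is hidden from the camera, which is why occlusion has to be handled separately. The brute-force search here is quadratic; a spatial index would be needed for real point clouds.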

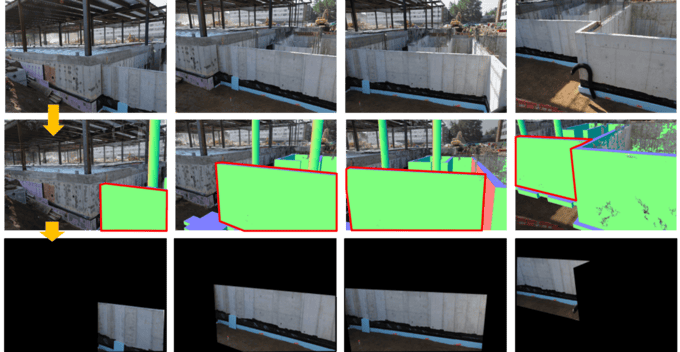

To address the challenge of layered elements, we created an algorithm that learns to identify materials based on image features and 3D surfaces produced by photogrammetry (above). The image features are computed with convolutional neural networks, a deep learning technique that has recently revolutionized pattern recognition. Materials are sometimes difficult to identify from either image appearance or 3D surface geometry alone, but we find that using both together provides significant improvements, reducing error by about 15%.
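The benefit of combining the two cues can be shown with a toy example. The sketch below swaps in a nearest-centroid classifier for the neural network and uses made-up feature values, so it is an illustration of feature fusion only, not of the published method. The names `fit` and `predict`, the material labels, and the numbers are all hypothetical.

```python
import math


def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def fit(samples):
    """samples: list of (appearance_vec, geometry_vec, label) tuples."""
    by_label = {}
    for appearance, geometry, label in samples:
        # Fuse the two cues by concatenating their feature vectors.
        by_label.setdefault(label, []).append(list(appearance) + list(geometry))
    return {label: centroid(vs) for label, vs in by_label.items()}


def predict(model, appearance, geometry):
    """Return the label whose centroid is closest to the fused feature vector."""
    x = list(appearance) + list(geometry)
    return min(model, key=lambda label: math.dist(x, model[label]))


# Toy data: two materials that look alike but differ in surface roughness.
training = [
    ([0.50, 0.52], [0.05], "concrete"),
    ([0.48, 0.50], [0.10], "concrete"),
    ([0.51, 0.49], [0.80], "gravel"),
    ([0.49, 0.53], [0.90], "gravel"),
]
model = fit(training)
rough = predict(model, [0.50, 0.50], [0.70])
smooth = predict(model, [0.50, 0.50], [0.10])
```

The two queries have identical appearance features, so an appearance-only classifier could not separate them; the geometry feature is what decides between `"gravel"` and `"concrete"` here.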

To determine whether a BIM element is in place, we can analyze its similarity to the 3D points and backproject its surface to images, as shown above. Then, we can classify whether the shape and material of each element are present in the as-built model. We also analyze constraints in the schedule and physical connectivity of elements to help infer whether occluded elements have been put in place.
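The constraint-based inference can be illustrated with a small dependency graph: if an element is observed in place, everything it physically or logically depends on must already be in place too, even if hidden. This is a minimal sketch under that single assumption; the function name `infer_in_place`, the `prerequisites` mapping, and the element names are hypothetical.

```python
def infer_in_place(observed, prerequisites):
    """Return observed elements plus all of their transitive prerequisites."""
    inferred = set(observed)
    stack = list(observed)
    while stack:
        element = stack.pop()
        for required in prerequisites.get(element, ()):
            if required not in inferred:
                inferred.add(required)
                stack.append(required)
    return inferred


# Illustrative sequencing: each element lists what must be built before it.
prerequisites = {
    "paint": ["drywall"],
    "drywall": ["studs"],
    "studs": ["slab"],
}
in_place = infer_in_place({"drywall"}, prerequisites)
```

Seeing drywall implies that the studs and slab behind it exist, while nothing can be concluded about the paint. Real schedules also carry uncertainty, which a hard rule like this ignores.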

When will automated progress monitoring be ready for the field?

We clearly have all the pieces: autonomous data capture, markers for alignment, material and shape recognition, analysis of schedule and structural constraints, and a plan to put it all together. But as leaders in technology, we understand the limitations, as well as the potential, of today's AI technology. It will still be a few years before technical and regulatory hurdles are sufficiently overcome for daredevil drones and roving robots to automatically update a construction manager's progress dashboard in real time. Meanwhile, at Reconstruct, we are committed to streamlining the process by gradually increasing automation and integrating non-automated pieces into standard workflows such as field reports. So, using today's AI technology, Reconstruct already makes it easy for owners, managers, and subcontractors to stay up to date on progress.

References

Below are some of the technical papers that describe the original research discussed here. Golparvar-Fard is CEO, Hoiem is CTO, and Bretl, DeGol, Han, and Lin are co-founders of Reconstruct.

A Passive Mechanism for Relocating Payloads with a Quadrotor. J. DeGol, D. Hanley, N. Aghasadeghi, and T. Bretl. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). 2015.

ChromaTag: A Colored Marker and Fast Detection Algorithm. J. DeGol, T. Bretl, and D. Hoiem. IEEE International Conference on Computer Vision (ICCV). 2017.

Integrated sequential as-built and as-planned representation with D4AR tools in support of decision-making tasks in the AEC/FM industry. M. Golparvar-Fard, F. Peña-Mora, and S. Savarese. Journal of Construction Engineering and Management. 2011.

Appearance-based Material Classification for Monitoring of Operation-level Construction Progress using 4D BIM and Site Photologs. K. Han and M. Golparvar-Fard. Automation in Construction. 2015.

Geometry-Informed Material Recognition. J. DeGol, M. Golparvar-Fard, and D. Hoiem. IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2016.

D4AR: a 4-dimensional augmented reality model for automating construction progress monitoring data collection, processing and communication. M. Golparvar-Fard, F. Peña-Mora, and S. Savarese. Journal of Information Technology in Construction. 2009.

Visualization of construction progress monitoring with 4D simulation model overlaid on time-lapsed photographs. M. Golparvar-Fard, F. Peña-Mora, C.A. Arboleda, and S. Lee. Journal of Computing in Civil Engineering. 2009.

Automated progress monitoring using unordered daily construction photographs and IFC-based building information models. M. Golparvar-Fard, F. Peña-Mora, and S. Savarese. Journal of Computing in Civil Engineering. 2012.

Formalized knowledge of construction sequencing for visual monitoring of work-in-progress via incomplete point clouds and low-LoD 4D BIMs. K. Han, D. Cline, and M. Golparvar-Fard. Advanced Engineering Informatics. 2015.

Related Posts

Gingerbread Coffee Shop

The 2021 Reconstruct Gingerbread "house": the Gingerbread Coffee Shop!

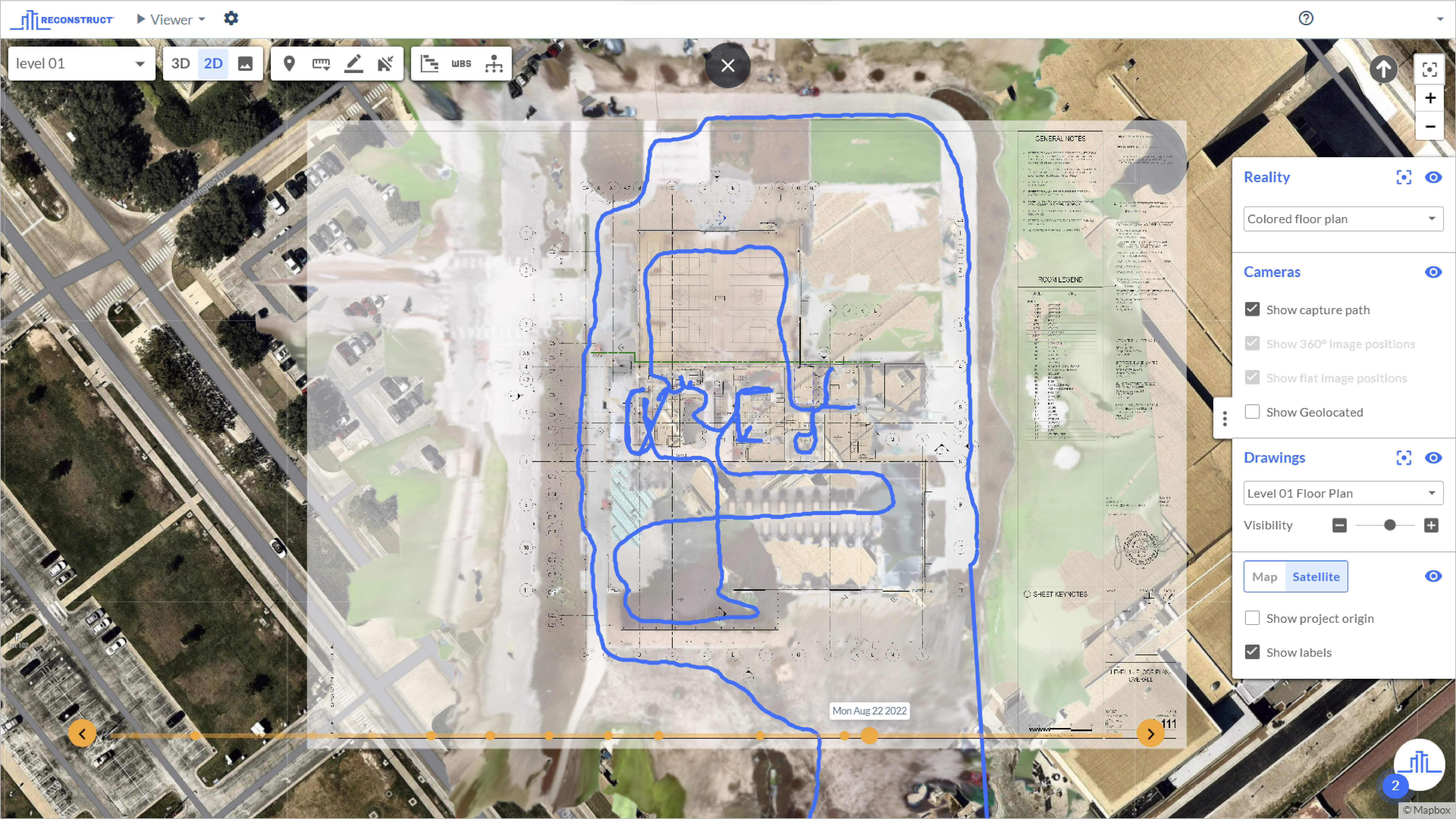

Geo-Referenced Reality Capture for Construction Stakeholders

For geo-referenced reality capture of construction projects, the right reality mapping engine can now align 360 and smartphone footage to satellite maps.

How Reality Mapping Can Fill in the Gaps of 4D BIM

4D BIM is great for modeling a structure’s final design. For temporary conditions, fast and intuitive 4D reality mapping fills in the gaps.